Five Questions to Prepare Your Snow Removal Operations Before the First Flake

A Simple Framework to Move from Reactive Scrambling to Strategic Control During Winter Storms

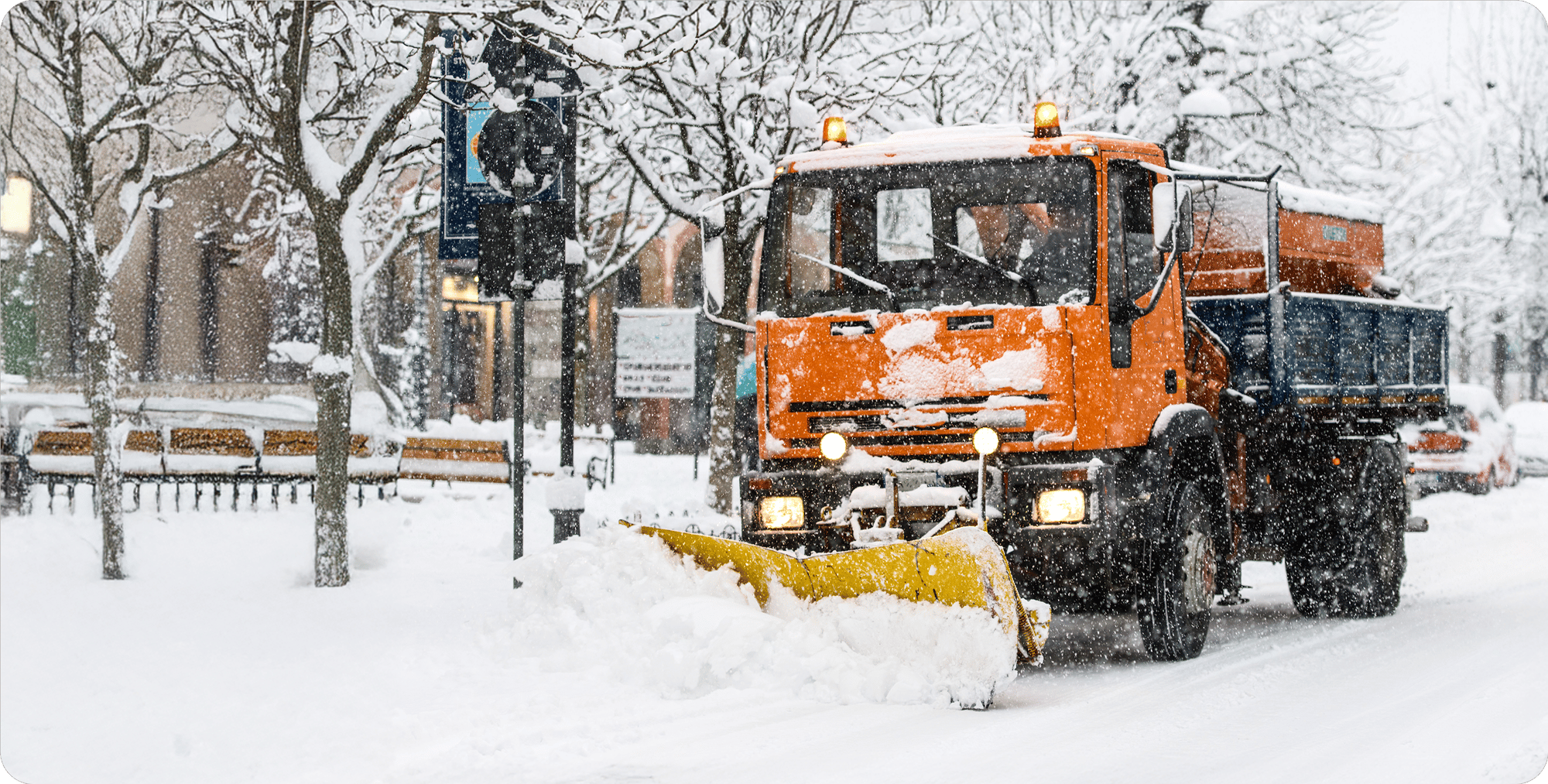

It's 4 AM. Six inches of snow have blanketed your Midwest locations. Your phone lights up with store managers reporting unplowed lots, customers slipping on ice, and vendors who aren't answering. Do you know which vendor covers which site? What triggers emergency dispatch? Who can approve premium rates when your primary contractor doesn't show?

Season after season, most multi-location operators scramble to find last year's vendor contracts, argue about what "plowed" actually means, and discover that the person who "handled snow stuff" left the company in July.

The best-run operations managing hundreds of locations for brands like Cumberland Farms and Marathon have answered five critical questions before the first forecast. The difference isn't budget size or vendor network. It's clarity.

Question 1: Do You Have a Documented Process for Snow Removal Dispatch?

When snow starts falling across your territory, does your team know:

- Which vendors cover which locations?
- What triggers dispatch: 1 inch? 2 inches? Ice accumulation?
- Who authorizes the vendor call?
- What information you must collect and log before, during, and after service?
- Who receives notification when a vendor doesn't respond or complete service?
- What escalation path to follow when your primary vendor is unavailable?

If these answers live in someone's head or in an email thread from last February, you're improvising every storm. When vendors face hundreds of service calls during the same weather event, they prioritize operations with clear expectations. The ones calling around confused get put at the back of the line.

Question 2: Can You Define What "Plowed and Salted" Actually Means for Each Location?

"Get the lot cleared" sounds straightforward until you realize ten different people have ten different definitions. Does "cleared" mean:

- Plowed to the curbs or just main traffic lanes?
- All pedestrian walkways from parking to entrance?
- Fuel islands and areas around dispensers?
- Salt or ice melt applied to all walking surfaces?
- Snow pushed to designated areas only (avoiding no-plow zones)?

Gas stations and convenience stores have layouts that make generic snow contracts dangerous. Fuel islands sit over underground tanks. Canopies have support columns. Tank fill ports and utility access points can't be buried under snow piles. Without site-specific plow maps showing no-plow zones, approved snow dump areas, and priority pathways, you leave a plow operator at 5 AM to make those calls. And if they guess wrong, you're dealing with damaged infrastructure, inaccessible fuel systems, or, worse, safety incidents.

Question 3: Who Owns What When Weather Hits?

When a regional snow event hits dozens of locations simultaneously, role clarity separates coordinated response from expensive chaos. When vendors are running behind, stores are calling corporate, and routine maintenance still needs attention, your team needs to know:

- Who monitors weather forecasts and triggers pre-storm preparation?
- Who has authority to approve emergency vendor calls at premium rates?
- Who communicates with store operations about delays or closures?
- Who decides to defer non-emergency maintenance during active storms?
- Who tracks vendor no-shows and initiates backup vendor dispatch?
- Who documents storm response for post-season vendor performance reviews?

The most expensive decisions in snow management are the ones nobody realizes they're supposed to make, until three stores are closed because nobody approved the emergency vendor at double rate.

Question 4: How Do You Onboard Someone Into Your Snow Operations?

Here's the real test: If you hired someone today, how long would it take them to manage snow removal across your locations independently? A week? A month? "They'd have to go through a winter first"?

Snow season lasts only a few months in most regions, and turnover in facilities management is brutal, so you can't afford a full season of apprenticeship. If your answer involves phrases like "they'll learn as they go" or "they'll shadow during the first few storms," you don't have a process; you have tribal knowledge.

The best-run operations can hand someone a snow response playbook and have them managing storms within days, not seasons.

Question 5: What Does "Completed Successfully" Look Like Specifically?

When a vendor marks a snow removal work order as complete, what should be documented? What photos are required? What follow-up happens if quality doesn't meet standards? How do you track patterns of late service or incomplete work across vendors and locations?

Vague expectations create vague results and make accountability impossible. You can't hold vendors to standards that were never defined. Operations holding contractors accountable "like never before" don't rely on forceful phone calls. They enforce documented standards, photo requirements, and completion criteria that everyone understood before the first storm.

And when spring comes and you're deciding whether to renew contracts or rebid services, you need performance data, not opinions about who "seemed pretty good this year." Your documentation determines whether a seasonal contract or per-push pricing makes sense for next year.
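
One way to turn completed work orders into that kind of performance data is a simple per-vendor tally. The sketch below is illustrative only: the `WorkOrder` fields, the vendor names in the usage note, and the 120-minute arrival limit are assumptions, not fields your CMMS necessarily exposes in this form.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class WorkOrder:
    vendor: str
    location: str
    minutes_to_arrival: int  # time from dispatch to on-site arrival
    photos_submitted: bool   # completion photos attached to the work order
    passed_review: bool      # service met the documented completion criteria


def summarize_vendors(orders, max_arrival_minutes=120):
    """Count late, undocumented, and failed jobs for each vendor."""
    totals = defaultdict(lambda: {"jobs": 0, "late": 0, "no_photos": 0, "failed": 0})
    for order in orders:
        row = totals[order.vendor]
        row["jobs"] += 1
        # Booleans add as 0 or 1, so each line increments only when true.
        row["late"] += order.minutes_to_arrival > max_arrival_minutes
        row["no_photos"] += not order.photos_submitted
        row["failed"] += not order.passed_review
    return {
        vendor: {**row, "late_rate": row["late"] / row["jobs"]}
        for vendor, row in totals.items()
    }
```

Feeding it a season of records, for example `summarize_vendors(orders)["Vendor A"]["late_rate"]`, gives you a number to bring to the renewal conversation instead of an impression.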

From Questions to Action

If you found yourself hedging on any of these questions, you're not alone. Most maintenance departments enter snow season the same way they've always done it: "we'll figure it out when it snows." Until the person who "always handled it" is gone, or a major storm exposes that nobody actually knows who's supposed to do what.

Winter is coming. The question isn't whether you'll face storms. It's whether your team will handle them with confidence and clarity, or with the same scrambling and confusion as every year before.

The operators who answer these five questions before the first forecast don't just survive snow season. They control it.

THE PROBLEM:

Knowledge That Lives in Heads, Not Documents

You just worked through the five questions. Maybe you sat down with your team and talked through each one. Maybe you even typed out answers. You know how your snow removal operation should work.

But here's what happens next in most organizations: someone volunteers to "write it all up." Three weeks later, they've documented the vendor list and maybe the dispatch triggers. The role clarity section is half-finished. The site-specific requirements are "still being gathered." By the time snow actually hits, you're back to the same improvised chaos because the document never got finished.

Or worse, someone does finish it. They spend forty hours writing a comprehensive snow removal manual. It's thorough. It's detailed. And it's immediately outdated because they documented the process exactly as it existed in October, but by December, two vendors changed, you adjusted your trigger points after the first storm, and nobody has time to update the document.

The person who knows how your snow operation runs can't clone themselves. And they definitely can't spend a week every fall writing documentation that's obsolete before the first plow hits pavement.

THE FRAMEWORK:

Why AI Changes Everything About Process Documentation

Here's the reality: you already have the knowledge. You answered the five questions. You probably recorded a conversation where your team talked through every scenario, every decision point, every vendor contact. Or you typed out detailed responses. That information exists.

The problem was never having the knowledge. It was turning that knowledge into a structured, usable process document that someone could actually follow. That's the part that took weeks and never quite got finished.

AI solves that specific problem. You can take your interview transcript, your typed answers, or even your rambling notes from a team meeting, feed them into an AI tool, and get a structured process document back in minutes. Not perfect, but roughly 90% complete, properly formatted, with logical flow and clear decision points.

The goal isn't to have AI write your process for you. The goal is to have AI structure the knowledge you already articulated into a document that's immediately useful. You'll still need to refine it, add company-specific details, and adjust for your exact operation. But you're starting from 90% instead of staring at a blank page.

What Makes a Good Snow Removal Process Document?

Before we get into the actual prompt, you need to know what you're aiming for. A good snow removal process document includes:

1. Clear trigger points and decision trees.

Not "plow when it snows" but "dispatch at 2 inches of accumulation during business hours, 1.5 inches overnight or during forecasted ice events, with escalation to emergency vendors if primary contractor hasn't confirmed arrival within 90 minutes of dispatch."

2. Specific vendor assignments and contact protocols.

Which vendor covers which locations, who's the primary contact, who's backup, what information gets logged when you dispatch, and what happens when they don't answer.

3. Escalation paths when things go wrong.

Your primary vendor doesn't show. Your backup vendor is overwhelmed. A plow hits a bollard. A store manager calls corporate screaming. Who does what, in what order, and who has authority to make decisions?

4. Documentation requirements at each step.

What photos do you require for completed work orders? What information needs logging in your CMMS? How do you track vendor arrival times, completion times, and any issues encountered?

5. Site-specific considerations.

No-plow zones for each location type. Priority clearing paths (main entrance before side parking). Special requirements for fuel islands, underground tanks, utility access points.

If your process document has those five elements, someone can pick it up and manage a storm without constantly asking you what to do next.
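
The trigger-point example in item 1 can be written down as two small checks. This is a minimal sketch, assuming the 2-inch, 1.5-inch, and 90-minute figures from that example; the function and constant names are hypothetical, and your own thresholds belong in their place.

```python
from datetime import datetime, timedelta

# Figures taken from the example wording in item 1; replace with your own.
BUSINESS_HOURS_THRESHOLD_IN = 2.0
REDUCED_THRESHOLD_IN = 1.5  # applies overnight or when ice is forecast
CONFIRMATION_WINDOW = timedelta(minutes=90)


def should_dispatch(accumulation_in, overnight=False, ice_forecast=False):
    """Return True when accumulation meets the threshold for the conditions."""
    if overnight or ice_forecast:
        threshold = REDUCED_THRESHOLD_IN
    else:
        threshold = BUSINESS_HOURS_THRESHOLD_IN
    return accumulation_in >= threshold


def needs_emergency_escalation(dispatched_at, confirmed_at, now):
    """Return True when the primary vendor has not confirmed within the window."""
    if confirmed_at is not None:
        return False
    return now - dispatched_at >= CONFIRMATION_WINDOW
```

Writing the rule this way forces the questions a vague process avoids: which threshold applies at 3 AM, and exactly how long you wait before calling the backup.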

THE PROMPT:

What to Feed the AI

Here's the prompt structure that takes your interview transcript or typed answers and generates a usable snow removal process document. You can copy this, adjust the bracketed sections for your specifics, and use it with any AI tool (Claude, ChatGPT, or whatever you prefer).

The Prompt:

I need you to create a comprehensive snow removal operations process document for a facilities maintenance department managing [NUMBER] convenience store/gas station locations across [REGIONS/STATES].

I'm going to provide you with either:

- A transcript of a conversation where our team discussed how we handle snow removal operations, OR
- Written answers to key questions about our snow removal process

Your job is to take that raw information and structure it into a clear, actionable process document that someone new to our operation could use to manage snow removal independently.

Required sections for the document:

1. Weather Monitoring and Pre-Storm Preparation

- How we monitor forecasts and what sources we use
- Trigger points for different actions (monitoring vs. pre-positioning vs. active dispatch)
- Pre-storm communication protocols (who gets notified, when, with what information)
- Vendor readiness verification steps

2. Dispatch Decision-Making and Execution

- Specific trigger points for dispatch (accumulation thresholds, ice conditions, timing considerations)
- Who has authority to authorize dispatch and under what conditions
- Step-by-step dispatch protocol (what information to gather, how to contact vendors, what to log)
- How we handle simultaneous storms across multiple regions

3. Vendor Management During Active Storms

- Which vendors are assigned to which locations (create a clear mapping)
- Primary contact protocols and backup contacts
- Expected response times and arrival confirmation procedures
- What to do when vendors don't respond or can't meet commitments
- Emergency escalation process and backup vendor activation

4. Service Standards and Site-Specific Requirements

- Definition of "complete" service for our locations
- Priority areas that must be cleared (fuel islands, main entrances, pedestrian paths)
- No-plow zones and restricted areas (tank fill ports, utility access, specific landscaping)
- Salt/ice melt application standards
- Any location-specific variations or special requirements

5. Documentation and Quality Verification

- Required photos and timestamps for work order completion
- What information must be logged in our CMMS/work order system
- Quality verification steps (who checks, what they look for, what happens if service is incomplete)
- How we track vendor performance during storms

6. Post-Storm Process and Damage Documentation

- Post-storm inspection requirements
- How to document and report plow damage (bollards, curbs, signage, landscaping)
- Process for addressing incomplete service or quality issues
- Communication with store operations after storms

7. Role Assignments and Communication Protocols

- Who owns each phase of the storm response
- Decision-making authority at each level
- Communication flow during active storms (who updates whom, how often, through what channels)
- Escalation paths when problems arise

Formatting requirements:

- Use clear headers and subheaders for easy navigation
- Include specific decision points with "IF/THEN" logic where appropriate
- Call out any gaps where we need to add more specific information
- Use bullet points for lists and steps, but full paragraphs to explain reasoning or context
- Flag any areas where our current process seems unclear or incomplete

What to avoid:

- Don't make up details we didn't provide
- Don't use generic facilities management jargon; use our specific terminology
- Don't create overly complicated flowcharts or diagrams (stick to clear written process)
- Don't assume standard industry practices unless we specifically mentioned them

Input to process: [PASTE YOUR INTERVIEW TRANSCRIPT OR TYPED ANSWERS HERE]

Create a process document that's immediately usable but also identifies where we need to add more detail or make decisions we haven't clarified yet.
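
If you reuse the prompt across regions or seasons, it helps to fill the bracketed sections programmatically so none is left behind by accident. The following is a rough sketch: `PROMPT_TEMPLATE` is abbreviated to the lines that contain placeholders, and `fill_prompt` is a hypothetical helper, not part of any AI tool.

```python
import re

# Abbreviated: only the lines of the prompt that contain bracketed sections.
PROMPT_TEMPLATE = (
    "I need you to create a comprehensive snow removal operations process "
    "document for a facilities maintenance department managing [NUMBER] "
    "convenience store/gas station locations across [REGIONS/STATES].\n\n"
    "Input to process: [PASTE YOUR INTERVIEW TRANSCRIPT OR TYPED ANSWERS HERE]"
)

# Matches bracketed, all-caps placeholders such as [NUMBER].
PLACEHOLDER = re.compile(r"\[[A-Z][A-Z /]+\]")


def fill_prompt(template, values):
    """Replace every bracketed placeholder, refusing to continue if one is missing."""
    missing = [p for p in PLACEHOLDER.findall(template) if p not in values]
    if missing:
        raise ValueError(f"No value supplied for: {missing}")
    # A single pass, so brackets inside a pasted transcript are left alone.
    return PLACEHOLDER.sub(lambda match: values[match.group(0)], template)
```

The check runs before any substitution, so a prompt with an unfilled `[REGIONS/STATES]` never reaches the AI tool.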

What to Include With the Prompt

Along with the prompt itself, you need to provide the raw material. This is either:

Option 1: Your Interview Transcript

If you recorded a conversation where you and your team talked through the five questions, get that audio transcribed. Some AI tools can transcribe audio directly, or you can use a service like Otter.ai or Rev. The transcript doesn't need to be perfectly clean. AI can work with conversational language, tangents, and even incomplete thoughts. Just make sure the core information from your five-question discussion is captured.

Option 2: Your Typed Answers

If you wrote out answers to the five questions, that works just as well. Don't worry about perfect formatting or complete sentences. The AI will structure it. Just make sure you've addressed:

- Who does what when weather hits
- What your dispatch triggers are and who authorizes dispatch
- Which vendors cover which locations
- What "complete" service means for your locations
- What gets documented and how

Optional Additions: If you have any existing documentation, even outdated or incomplete, include it: vendor lists, old contracts with service specifications, site maps, previous work order templates. The AI can incorporate that information into the new process document, which saves you from retyping details that haven't changed.

EXAMPLE:

What Good Output Looks Like

Let's look at what happens when you give the AI the prompt along with your raw material. Here's a before and after.

Before: Raw Interview Transcript Snippet

"So basically when we see snow coming, somebody needs to check the forecast, probably the night before. We usually look at Weather.com or sometimes the Weather Channel app. If it's gonna be more than like 2 inches we call the vendors. Well, sometimes 2 inches, sometimes less if it's gonna be during the morning rush. Really depends. For the Midwest stores that's Jim's Plowing and for the Northeast we've got three vendors I think? Wait, two now because we dropped that one guy last year. Anyway, we call them and make sure they're ready. Sometimes they don't answer right away which is annoying. We should probably have a backup for that..."

After: Structured Process Document Output

Weather Monitoring and Pre-Storm Preparation

The maintenance coordinator on duty monitors weather forecasts for all operating regions beginning 48 hours before any forecasted snow event. Primary forecast sources include Weather.com and The Weather Channel mobile app.

Monitoring trigger points:

- 48 hours before forecasted snow: Begin daily forecast checks
- 24 hours before forecasted snow: Verify vendor availability and contact information
- 12 hours before forecasted snow (if 2+ inches predicted): Initiate vendor pre-positioning communication

Vendor notification protocol: The coordinator contacts vendors once accumulation forecasts meet the following thresholds:

- 2+ inches during standard hours (10 AM - 8 PM)
- 1.5+ inches during morning rush (5 AM - 10 AM)
- Any ice accumulation regardless of snow depth

Current vendor assignments:

- Midwest region: Jim's Plowing (Primary contact: Jim Reynolds, 555-0123)
- Northeast region: [NEED TO CLARIFY - mentioned "two vendors now" but specific companies and contacts not provided]

Gap identified: No backup vendor contact process defined for when primary vendors don't respond. Current process: "Sometimes they don't answer right away which is annoying."

Action needed: Define maximum acceptable response time and identify backup vendors for each region.

See what happened?

The AI took conversational, incomplete information and:

- Created logical structure - Organized scattered thoughts into clear sections with headers
- Identified decision points - Pulled out the specific thresholds (2 inches, 1.5 inches, timing considerations)
- Assigned ownership - Made clear who's responsible ("the maintenance coordinator on duty")
- Flagged gaps - Called out where information was incomplete or unclear
- Preserved specifics - Kept exact details like vendor names and threshold numbers

What the AI Did Well:

The output turned "somebody needs to check" into "the maintenance coordinator on duty monitors," which provides the specificity you need for process documentation. It took rough timing considerations ("probably the night before") and created a 48-hour/24-hour/12-hour monitoring cadence. It caught that you mentioned dropping a vendor but didn't provide the current Northeast vendor list, and flagged it as a gap to fill.

Common Issues to Watch For and Fix:

Even with a good prompt, AI output needs human refinement. Here's what to watch for:

AI being too generic.

If the output says "contact the appropriate vendor" instead of "call Jim Reynolds at Jim's Plowing (555-0123) and confirm availability for the forecasted storm window," make it specific. Generic process documents don't help anyone.

Missing your company-specific terminology or tools.

If you use ServiceChannel or Corrigo or a custom CMMS, make sure that's reflected. If you call your maintenance coordinators "facilities dispatchers" or "VMCs," make sure the AI uses your terminology. You can add a note to the prompt: "We use [CMMS NAME] for work orders and refer to our coordination team as [ROLE NAME]."

Over-complicating simple steps.

Sometimes AI will turn "call the vendor" into a five-step communication protocol with templates and follow-up schedules. If something is straightforward, simplify it. Your process document should be clear, not bureaucratic.

Assuming standard practices you don't actually follow.

If the AI adds a step you didn't mention, like "conduct post-storm debrief meetings," and you don't actually do that, remove it. Document the process you'll actually execute, not the process that sounds impressive.

HOW TO ITERATE:

Feeding the Output Back With Refinements

Your first AI-generated draft won't be perfect. That's expected. Here's how to refine it:

- Read through the entire document and highlight gaps. Where did the AI say "NEED TO CLARIFY" or "Action needed"? Those are places where your original answers were incomplete.
- Fill in the gaps with specific information. Write out the missing details: exact vendor names, complete contact lists, specific site requirements you forgot to mention.
- Feed it back to the AI with refinement instructions. Copy the draft document, add your new information, and give the AI a refined prompt:

"I've reviewed the snow removal process document you created. I'm providing additional information to fill gaps and correct areas that need more specificity. Please update the document by incorporating this new information while maintaining the same structure and format:

[PASTE YOUR ADDITIONS AND CORRECTIONS]

Update the document and remove any 'gap identified' or 'action needed' notes where I've now provided the missing information."

You can iterate this way 2-3 times until you have a complete, accurate process document. Each iteration takes minutes, not days.
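
Between iterations, a quick scan for the gap flags gives you a checklist of what is still open. This is a minimal sketch: the marker strings come from the example output earlier in this guide, and your AI tool may word its flags differently, so treat `GAP_MARKERS` as an assumption to adjust.

```python
# Marker phrases as they appear in the example output; adjust to match yours.
GAP_MARKERS = ("NEED TO CLARIFY", "Gap identified", "Action needed")


def find_gaps(draft):
    """Return (line_number, text) for each line that carries a gap marker."""
    hits = []
    for number, line in enumerate(draft.splitlines(), start=1):
        lowered = line.lower()
        if any(marker.lower() in lowered for marker in GAP_MARKERS):
            hits.append((number, line.strip()))
    return hits
```

When `find_gaps` returns an empty list, every flag the AI raised has been resolved or removed, which is a reasonable signal that the draft is ready for a final human read.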

What You Have Now

At this point, you have a complete snow removal process document. Someone could read it and understand:

- When to start monitoring weather
- What triggers dispatch decisions
- Who to call and how to escalate
- What "complete" service means
- What needs documentation

That document is immediately more useful than the scattered knowledge that lived in your head last week. But a process document by itself doesn't execute. You still need to answer: who's doing what, specifically?

THE PROBLEM:

Process Without Ownership Is Just a Suggestion

You have a process document now. It's comprehensive. It's clear. It has everything someone needs to manage snow removal effectively.

And when the first storm hits, nobody does anything because nobody knows it's their job to do it.

This is the gap that kills most process improvement efforts. Organizations spend weeks documenting what should happen, then act surprised when it doesn't actually happen. The process document sits in a shared drive while your team operates exactly like they always have: reacting to problems after they occur, assuming someone else is handling the proactive stuff, and improvising when things go wrong.

Common failure patterns.

"Someone should be monitoring the weather." Okay, who? The facilities director checks it when they remember. The maintenance coordinator assumes the director is handling it. Meanwhile, nobody tracks the forecast consistently, and vendors don't get pre-positioned because nobody knew that was their responsibility.

"We need to approve emergency vendor dispatch." Who has that authority? When the coordinator can't reach their manager at 3 AM, can they make the call? Or do stores stay closed while they wait for approval? Nobody clarified, so the coordinator makes their best guess, and sometimes they guess wrong, either causing unnecessary delays or approving $10,000 in premium rates without authority.

"Vendors need to submit completion photos." Who verifies those photos actually meet standards? Who follows up when photos are missing? Who tracks patterns of incomplete documentation? If it's everyone's responsibility, it's nobody's responsibility.

Real example:

A chain managing 200+ locations had a detailed snow removal process document. When a major storm hit the Midwest, three different people dispatched vendors to the same locations (costing double), zero people verified completion photos (so they couldn't confirm service quality), and nobody documented vendor arrival times (making it impossible to hold contractors accountable for late response). They had the process. They didn't have ownership.

THE FRAMEWORK:

From Process to Ownership

There's a fundamental difference between a process and an assignment:

A process describes what happens:

"Weather forecasts are monitored 48 hours before storms, vendors are contacted when accumulation reaches 2 inches, and completion photos are verified before work orders close."

An assignment describes who does it:

"The maintenance coordinator checks forecasts daily during winter months, the facilities director authorizes dispatch for storms over 2 inches, and the coordinator verifies completion photos within 4 hours of vendor submission."

Your process document from Part 2 is full of action verbs—monitor, dispatch, approve, document, escalate, verify. Every single one of those verbs needs a specific owner. Not a department. Not "the team." A role that exactly one person fills at any given time.

WALKING THROUGH THE BREAKDOWN:

From Document to Assignment

Let's take your process document from Part 2 and turn it into actual ownership. Here's how to work through it systematically.

Step 1: Identify Every Action Verb

Go through your process document with a highlighter (literal or digital). Mark every action verb—every "monitor," "dispatch," "approve," "document," "escalate," "verify," "contact," "review," "update."

From the example document in Part 2, we'd highlight:

- Monitor weather forecasts
- Verify vendor availability
- Contact vendors once thresholds are met
- Authorize dispatch decisions
- Log dispatch information in CMMS
- Confirm vendor arrival
- Track vendor no-shows
- Initiate backup vendor escalation
- Verify completion photos
- Document any damage or issues
- Conduct post-storm quality checks

That list of verbs becomes your task list. If it's not on this list, it's not getting done.

Step 2: Group Related Actions Into Role Clusters

Some actions naturally belong together because they require the same skills, happen at the same time, or need the same level of authority. Group them.

Real-time coordination tasks (need someone actively monitoring and responding):

- Monitor weather forecasts
- Contact vendors
- Log dispatch information
- Confirm vendor arrival
- Track vendor no-shows
- Initiate escalations

Decision authority tasks (need someone with budget approval and vendor management authority):

- Authorize dispatch decisions
- Approve emergency/premium rate vendors
- Manage vendor performance issues
- Make contract decisions based on seasonal data

Quality control tasks (need someone detail-oriented who can enforce standards):

- Verify completion photos
- Conduct post-storm quality checks
- Document damage or incomplete service
- Track documentation compliance

Communication tasks (need someone who interfaces with operations):

- Communicate forecasts to store managers
- Provide storm updates to corporate
- Report completion status by region

You're not assigning these to people yet—you're just grouping similar work.

Step 3: Assign Ownership to Specific Roles

Now match those task clusters to roles. Use role titles, not names. Names change. Roles are stable.

For a typical multi-location facilities operation, assignments might look like:

Maintenance Coordinator role:

- Monitor weather forecasts daily during winter season
- Contact vendors when dispatch thresholds are met
- Log all dispatch activity in CMMS with timestamps
- Confirm vendor arrival and track response times
- Initiate backup vendor escalation when primary vendors are unavailable or late
- Verify completion photos meet documentation standards
- Document any service issues or damage reports

Facilities Director/Manager role:

- Authorize dispatch for storms meeting defined thresholds
- Approve emergency/premium rate vendor calls
- Review vendor performance data weekly during active season
- Communicate with operations leadership on major storm events
- Make contract renewal decisions based on seasonal performance data

Store Operations (informed/consulted, not responsible):

- Receive forecast notifications for their regions
- Report any service quality issues to maintenance coordinator
- Provide site access and support as needed

Notice what's different here: every task has exactly one role that owns it. The coordinator doesn't need to ask permission to contact vendors once thresholds are met—that's their responsibility. But they can't authorize premium rates—that's the director's responsibility. Clean lines.
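
The "exactly one owner" rule is easy to check mechanically once the assignments are written down. Here is a minimal sketch, assuming you keep the assignments as a mapping from role title to task list; `ownership_problems` is a hypothetical helper name, and the task strings in the usage note are abbreviated from the lists above.

```python
def ownership_problems(required_tasks, assignments):
    """Return (unowned, shared): tasks with no owner and tasks with several."""
    owners = {}
    for role, tasks in assignments.items():
        for task in tasks:
            owners.setdefault(task, []).append(role)
    # Tasks from the process document that no role has picked up.
    unowned = [task for task in required_tasks if task not in owners]
    # Tasks claimed by more than one role, which invites double dispatch.
    shared = {task: roles for task, roles in owners.items() if len(roles) > 1}
    return unowned, shared
```

For example, if "Authorize dispatch" appears under both the coordinator and the director while "Track vendor no-shows" appears under neither, the function reports one shared task and one unowned task. Both results should be empty before the first storm.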

Step 4: Identify Which Tasks Need Detailed SOPs

Go back through your assignments. For each task, ask: "Could someone new to this role execute this task with just the basic assignment, or do they need detailed instructions?"

Tasks that DON'T need SOPs:

- Log dispatch information in CMMS (straightforward data entry)
- Confirm vendor arrival (simple check-in call or message)
- Monitor weather forecasts (just check the forecast source daily)

Tasks that DO need SOPs:

- Authorize dispatch for storms meeting defined thresholds (requires judgment on accumulation, timing, forecast confidence)
- Initiate backup vendor escalation (requires decision tree: when to escalate, who to contact, what information to provide)
- Verify completion photos meet documentation standards (requires specific criteria for what's acceptable)

The tasks that need SOPs are usually the ones involving "if/then" decision-making or quality judgment.

EXAMPLE SOP:

Deciding When to Dispatch Vendors

Let's build a complete SOP for one of the most judgment-intensive tasks in snow removal.

SOP: Snow Removal Vendor Dispatch Decision

Purpose: This procedure defines how maintenance coordinators decide when to dispatch snow removal vendors and what information to gather before making that decision.

When to use: Review this procedure when any forecasted snow event appears in your monitoring regions within the next 48 hours.

Responsible role: Maintenance Coordinator

Inputs required before making dispatch decision:

- Current accumulation forecast from Weather.com (checked no more than 4 hours before the decision)
- Forecast confidence level (winter storm watch vs. warning vs. advisory)
- Current vendor availability confirmation (if within 24 hours of forecasted event)
- Store operating hours for affected locations
- Current vendor service queue status (during active storm periods)

Decision matrix:

Standard dispatch (primary vendors, standard rates):

- 2-4 inches accumulation during business hours (10 AM - 8 PM) + winter storm watch or warning
- 2-4 inches accumulation overnight/early morning (8 PM - 10 AM) + winter storm watch or warning
- Any ice accumulation forecast (regardless of snow depth)

Priority dispatch (expedited timing, may require premium rates):

- 4+ inches accumulation any time
- Ice accumulation + morning rush timing (5 AM - 10 AM forecast)
- Any accumulation + store-specific emergency request from operations

Monitor only (no dispatch yet):

- 1-2 inches forecast with winter weather advisory only
- Forecast more than 48 hours out with low confidence
- Flurries or light snow with no accumulation forecast
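
The decision matrix above can be read as a small classification function. The sketch below is one possible encoding, not the only valid reading: the parameter names are hypothetical, and any combination the matrix does not list (for example 2-4 inches under an advisory only) falls through to "monitor only" here, which is an assumption rather than something the SOP states.

```python
from enum import Enum


class Action(Enum):
    PRIORITY = "priority dispatch"
    STANDARD = "standard dispatch"
    MONITOR = "monitor only"


def classify(inches, alert=None, ice=False, morning_rush=False,
             emergency_request=False, hours_until_event=0, low_confidence=False):
    """Map a forecast onto the three outcomes of the decision matrix."""
    # Far-out, low-confidence forecasts are watched, not acted on.
    if hours_until_event > 48 and low_confidence:
        return Action.MONITOR
    # Priority rows: heavy snow, ice at morning rush, or a store emergency.
    has_accumulation = inches > 0 or ice
    if inches >= 4 or (ice and morning_rush) or (has_accumulation and emergency_request):
        return Action.PRIORITY
    # Standard rows: the matrix uses the same 2-4 inch band day and night.
    if ice or (inches >= 2 and alert in ("watch", "warning")):
        return Action.STANDARD
    # Assumption: anything the matrix does not list is treated as monitor only.
    return Action.MONITOR
```

A call such as `classify(3, alert="warning")` lands in standard dispatch, while `classify(0, ice=True, morning_rush=True)` lands in priority dispatch. Borderline forecasts still go through the special considerations that follow.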

Special considerations:

If forecast is borderline (1.5-2 inches):

- Check forecast trend (increasing or decreasing prediction)
- Consider timing (morning rush = lower threshold, overnight = higher threshold)
- Review regional patterns (does this forecast source typically over/under-predict for this region?)
- When in doubt, consult with facilities director before committing vendors

If primary vendor unavailable:

- Immediately contact backup vendor and confirm availability
- Notify facilities director if backup also unavailable; emergency escalation required
- Do not delay dispatch decision waiting for primary vendor response beyond 2 hours

If forecast changes significantly after dispatch:

-

Increases to 6+ inches: Notify vendor of updated forecast, confirm they can still service all locations, alert operations of potential delays

-

Decreases below dispatch threshold: Contact vendor to cancel or reduce scope if more than 12 hours before event, otherwise proceed as planned

Outputs and documentation:

After dispatch decision, log in CMMS:

-

Dispatch authorization timestamp

-

Accumulation forecast and source

-

Vendor confirmation time

-

Expected service window

-

Any special instructions or priority locations

Notify:

-

Vendor: confirm dispatch, provide service window, specify any priority locations

-

Store operations: expected vendor arrival window for their regions

-

Facilities director: dispatch summary if 4+ locations affected or emergency rates required

Set follow-up:

-

Calendar reminder for vendor arrival confirmation (2 hours before expected service window)

-

Weather forecast check (4 hours before event to catch any significant changes)

Common questions:

Q: What if I'm not sure whether to dispatch?

A: If the accumulation forecast is between 1.5 and 2 inches or forecast confidence is low, consult with the facilities director. Document the consultation and decision in CMMS. It's better to ask than to guess wrong.

Q: Can I dispatch outside these thresholds if a store manager requests it?

A: Store managers can request emergency snow removal, which triggers priority dispatch. Document the request and requesting manager name. If it's outside normal thresholds and not clearly justified, loop in facilities director before committing vendors.

Q: What if the vendor says they'll try to get there but can't commit to timing?

A: That's not confirmation. That's a soft no. Move immediately to backup vendor and notify facilities director. We need committed service windows, not best efforts.

This SOP doesn't just say "decide when to dispatch vendors." It provides specific thresholds, handles edge cases, tells you what to do when things go wrong, and answers the questions coordinators actually ask when they're new to the role.

That's the level of detail you need for SOPs. Not for everything—just for the tasks that require judgment or have multiple decision points.

Common SOP Mistakes to Avoid

Too vague:

"Use good judgment to determine if conditions warrant vendor dispatch." That's not an SOP. That's an instruction to guess.

Too rigid:

"Follow these 23 steps in exact order with no deviation regardless of circumstances." Nobody will follow that. Reality is messier than 23-step procedures.

No clear owner:

"The team will evaluate forecast conditions and coordinate vendor response." Who, specifically? One person needs to own the decision.

Doesn't address exceptions:

SOPs that only cover perfect scenarios are useless. The SOP needs to tell you what to do when the vendor doesn't answer, when the forecast changes, when operations is screaming for emergency service.

Not maintained:

You write an SOP in October. By January, you've learned three important exceptions that should be in the SOP but aren't. Now people are following outdated instructions or ignoring the SOP entirely. SOPs need an owner who updates them when reality reveals gaps.

Common Issues to Watch For and Fix:

Even with a good prompt, AI output needs human refinement. Here's what to watch for:

AI being too generic.

If the output says "contact the appropriate vendor" instead of "call Jim Reynolds at Jim's Plowing (555-0123) and confirm availability for the forecasted storm window," make it specific. Generic process documents don't help anyone.

Missing your company-specific terminology or tools.

If you use ServiceChannel or Corrigo or a custom CMMS, make sure that's reflected. If you call your maintenance coordinators "facilities dispatchers" or "VMCs," make sure the AI uses your terminology. You can add a note to the prompt: "We use [CMMS NAME] for work orders and refer to our coordination team as [ROLE NAME]."

Over-complicating simple steps.

Sometimes AI will turn "call the vendor" into a five-step communication protocol with templates and follow-up schedules. If something is straightforward, simplify it. Your process document should be clear, not bureaucratic.

Assuming standard practices you don't actually follow.

If the AI adds a step you didn't mention like "conduct post-storm debrief meetings" and you don't actually do that, remove it. Document the process you'll actually execute, not the process that sounds impressive.

MAKING IT STICK:

The Assignment Matrix

You've broken down your process into tasks. You've grouped them into roles. You've written SOPs for the complex stuff. Now you need a simple reference document that shows who owns what.

Create a responsibility assignment matrix. You can use RACI (Responsible, Accountable, Consulted, Informed) or a simpler version. Here's what it looks like for snow removal:

| Task | Maintenance Coordinator | Facilities Director | Store Operations |
| --- | --- | --- | --- |
| Monitor weather forecasts daily | Responsible | Informed | - |
| Decide when to dispatch vendors | Responsible | Consulted (borderline cases) | - |
| Authorize dispatch execution | Responsible | - | - |
| Approve premium/emergency rates | Consulted | Responsible | - |
| Contact vendors and confirm availability | Responsible | - | - |
| Log dispatch information in CMMS | Responsible | - | - |
| Confirm vendor arrival on-site | Responsible | - | Consulted (if site access needed) |
| Track vendor no-shows | Responsible | Informed | - |
| Initiate backup vendor escalation | Responsible | Informed immediately | - |
| Verify completion photos | Responsible | - | - |
| Conduct post-storm quality checks | Responsible | - | Informed (if issues found) |
| Document damage or service issues | Responsible | Informed | Informed |
| Communicate storm updates to operations | Responsible | - | Informed |
| Review seasonal vendor performance | Consulted | Responsible | - |
| Make contract renewal decisions | Consulted | Responsible | - |

Responsible = The person who does the work

Accountable = The person who owns the outcome (often same as Responsible for individual tasks)

Consulted = People whose input is needed before action

Informed = People who need to know after action

You can simplify this if RACI feels like overkill. The key is: for every task, one role is clearly responsible. Not "we all handle it." One role owns it.

This matrix becomes your training document. When you hire a new maintenance coordinator, this shows them exactly what they own. When your facilities director goes on vacation, whoever's covering knows which decisions they need to make. When store operations asks "who do I talk to about the plow not showing up," this matrix tells them it's the maintenance coordinator.

What You Have Now And What's Still Missing

At this point, you've gone from scattered tribal knowledge to documented process to clear ownership. You have:

1. A complete process document that describes your snow removal operation end-to-end

2. Specific task assignments for each role involved

3. SOPs for the complex tasks that require judgment or have multiple steps

4. A responsibility matrix that makes ownership crystal clear

Someone new could join your team, read these documents, and start managing snow removal within a week instead of "after they go through a winter."

But here's what you still don't have: proof that it's working. You have assignments, but do you know if people are executing them? You have SOPs, but are they being followed? You have documented standards, but are vendors actually meeting them?

That's Part 4. How do you measure performance so you know your process is working—or where it's breaking down?

THE PROBLEM:

"Did We Get Complaints?" Is Not a Performance Metric

You've built the process. You've assigned ownership. You've created SOPs. The first major storm hits, and everything feels smooth. Vendors showed up. Lots got plowed. No angry calls from store managers. Success, right?

Three months later, when you're reviewing vendor contracts for next season, you realize you have no idea which vendors actually performed well. You think Jim's Plowing was "pretty reliable," but you can't prove it; for all you know, they responded within your SLA only 40% of the time. You're pretty sure your Northeast vendors were more expensive than Midwest, but you don't have cost-per-location data to confirm it. One of your coordinators seemed overwhelmed during storms, but you can't point to specific metrics showing where they struggled.

So you renew contracts based on gut feel, keep the same vendor assignments, and hope next season goes better. That's not management. That's guessing with a bigger budget.

Here's what happens when you manage snow removal without metrics:

You can't hold vendors accountable objectively. "You guys were late a lot" doesn't hold up when a vendor replies "we hit 95% of our target times." Were they late? You don't know. You didn't track arrival times.

You can't make informed contract decisions. Should you go with per-push pricing or seasonal flat rate next year? Depends on how many events you had and what your per-location costs ran. If you don't have that data, you're negotiating blind.

You can't identify if your coordinator is struggling or if your vendor is unreliable. Work orders close late. Is that because your coordinator is slow to verify completion, or because vendors aren't submitting documentation? Without metrics, you can't tell the difference.

You can't justify budget requests. When finance asks why you need 15% more for snow removal next year, "it just costs more now" doesn't work. "We had 12% more storm events and vendor rates increased 8% while our cost per location only rose 6% due to better vendor management" works.

Real example:

A facilities team managing 150 locations thought they had solid vendor relationships. When they finally started tracking metrics mid-season, they discovered one vendor was arriving 3+ hours after dispatch 60% of the time, triggering emergency backup vendor calls at premium rates. They'd been paying double on 60% of that vendor's dispatches because nobody was measuring response time. Switching vendors mid-season saved them $40,000 over the rest of the winter.

The Framework: Leading vs. Lagging Indicators

Before we get into specific metrics, you need to understand the difference between indicators that tell you what already happened versus indicators that tell you what's about to happen.

Lagging indicators measure outcomes after they occur:

-

Customer complaints about unplowed lots

-

Safety incidents from icy walkways

-

Total seasonal spend on snow removal

-

Vendor invoice disputes

These matter. You need to track them. But they tell you about problems after damage is done. A customer already slipped. A store was already inaccessible during morning rush. Money was already spent.

Leading indicators measure performance while you still have time to fix problems:

-

Vendor response time from dispatch to arrival

-

Completion photo submission rate within 4 hours

-

Forecast monitoring consistency (daily checks happening or skipped?)

-

Backup vendor activation frequency

These metrics tell you that something's going wrong before it becomes a crisis. Vendor response times creeping from 90 minutes to 2+ hours? That's a problem you can address before it causes store closures. Completion photo rates dropping below 90%? That's a documentation issue you can fix before you lose vendor accountability.

Snow removal is unique because the season is short and the stakes are high. You get maybe 10-20 storm events per winter depending on region. That's not many opportunities to course-correct. Your metrics need to tell you fast when something's not working.

Your KPI framework should answer three questions:

1. Are we responding fast enough? (Time from weather event to completed service)

2. Are we completing work to standard? (Quality and documentation compliance)

3. Are we managing costs effectively? (Budget performance and efficiency)

If you can answer those three questions with data instead of opinions, you're ahead of 90% of facilities operations.

Essential KPIs Every Snow Removal Operation Should Track

These are the non-negotiable metrics. Regardless of your size, region, or vendor structure, you should track these. I'll tell you what to measure, why it matters, how to measure it, and what good performance looks like.

Response and Execution Metrics

1. Vendor Response Time (Dispatch to On-Site Arrival)

What it measures: Time from when you dispatch a vendor to when they confirm arrival on-site and begin work.

Why it matters: This separates your dispatch speed from vendor reliability. If you dispatch fast but vendors take 4 hours to arrive, you know where the problem is. This is also where premium emergency rates get triggered—slow response from primary vendors forces you to call backups at higher cost.

How to measure:

-

Log timestamp when coordinator dispatches vendor in CMMS

-

Log timestamp when vendor confirms on-site arrival

-

Calculate elapsed time

-

Track by vendor, by region, by storm severity

Target benchmark:

-

Standard storm conditions: Under 2 hours average

-

Emergency/major storm conditions: Under 1 hour average

-

Any response over 3 hours should trigger escalation protocol

What the data tells you:

-

One vendor consistently over 2 hours? Reliability problem—address or replace.

-

All vendors slow during specific storms? Capacity problem—you need more backup vendors for major events.

-

Response times increasing over the season? Vendor fatigue or overcommitment—they're taking too many clients.
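The measurement steps above can be sketched in a few lines. This is a minimal illustration assuming the two timestamps have been exported from your CMMS as text; the tuple layout, vendor names, and function names are hypothetical.

```python
from datetime import datetime
from statistics import mean

ESCALATION_HOURS = 3.0  # responses slower than this trigger escalation


def response_hours(dispatched: str, arrived: str) -> float:
    """Elapsed hours between dispatch and confirmed on-site arrival."""
    fmt = "%Y-%m-%d %H:%M"
    delta = datetime.strptime(arrived, fmt) - datetime.strptime(dispatched, fmt)
    return delta.total_seconds() / 3600


def summarize(log):
    """log: list of (vendor, dispatched, arrived) tuples.

    Returns {vendor: (average_hours, count_over_escalation_limit)}.
    """
    by_vendor = {}
    for vendor, dispatched, arrived in log:
        by_vendor.setdefault(vendor, []).append(response_hours(dispatched, arrived))
    return {
        vendor: (round(mean(hours), 2), sum(1 for h in hours if h > ESCALATION_HOURS))
        for vendor, hours in by_vendor.items()
    }


# Hypothetical sample data for one storm.
sample_log = [
    ("Vendor A", "2025-01-10 04:00", "2025-01-10 05:30"),
    ("Vendor A", "2025-01-10 04:10", "2025-01-10 06:40"),
    ("Vendor B", "2025-01-10 04:00", "2025-01-10 07:30"),
]
print(summarize(sample_log))
```

Grouping by vendor first is the design choice that matters here: an overall average can look healthy while one vendor is consistently late.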

2. Work Order Completion Rate During Storm Windows

What it measures: Percentage of dispatched locations that receive completed service within your defined service window.

Why it matters: Tells you if vendors can actually handle your volume when storms hit multiple regions simultaneously. A vendor who completes 95% of work orders during light snow but only 70% during major storms can't scale with your needs.

How to measure:

-

Track total locations dispatched per storm event

-

Track locations with confirmed completed service within service window (typically 4-6 hours for standard storms, 12 hours for major events)

-

Calculate completion rate: (Completed locations ÷ Dispatched locations) × 100

Target benchmark:

-

Standard storms (2-4 inches): 95%+ completion within service window

-

Major storms (4-8 inches): 90%+ completion within extended window

-

Extreme events (8+ inches): 85%+ completion, with clear communication on delays

What the data tells you:

-

Completion rates dropping below 90%? Vendor capacity issues or dispatch timing problems.

-

Specific locations consistently incomplete? Site access issues or vendor routing problems.

-

Completion rates high but quality complaints also high? They're rushing. You need better quality standards.
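As a rough sketch, the completion-rate formula and the tiered benchmarks above might be checked like this. The function names are hypothetical, and because the tiers overlap at their edges, the sketch assumes exactly 4 inches counts as a major storm and exactly 8 inches as an extreme event.

```python
def completion_target(inches: float) -> float:
    """Benchmark completion rate (%) for a storm of the given accumulation."""
    if inches >= 8:
        return 85.0  # extreme events
    if inches >= 4:
        return 90.0  # major storms
    return 95.0      # standard storms


def completion_rate(completed: int, dispatched: int) -> float:
    """(Completed locations / dispatched locations) x 100."""
    if dispatched <= 0:
        raise ValueError("at least one location must be dispatched")
    return 100 * completed / dispatched


def meets_target(completed: int, dispatched: int, inches: float) -> bool:
    return completion_rate(completed, dispatched) >= completion_target(inches)
```

For example, 38 of 42 locations completed (about 90.5%) would pass the benchmark for a 5-inch storm but miss it for a 3-inch storm.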

3. Service Quality Verification Rate

What it measures: Percentage of completed work orders with required documentation (photos, timestamps, service notes) that meet your defined quality standards.

Why it matters: This is the difference between "vendor says it's done" and "we verified it's actually done correctly." Without documentation, you have no evidence for quality issues, no proof for contract disputes, and no way to hold anyone accountable.

How to measure:

-

Track total work orders marked complete by vendors

-

Track work orders with required photo documentation submitted within 4 hours

-

Track work orders where photos meet quality standards (proper areas cleared, salt applied, no obvious gaps)

-

Calculate verification rate: (Properly documented completions ÷ Total completions) × 100

Target benchmark:

-

100% of completed work orders should have required documentation

-

Zero tolerance for missing photos or incomplete documentation

-

This is a yes/no metric: either it's documented to standard or the work order isn't actually complete

What the data tells you:

-

Verification rate below 100%? Your vendors aren't following requirements or your coordinator isn't enforcing them.

-

Specific vendor consistently low documentation? They don't take your standards seriously. Address immediately.

-

Documentation submitted but quality poor? Need better photo requirements and rejection criteria.

Cost and Efficiency Metrics

4. Cost Per Location Per Event

What it measures: Average cost to service one location during one snow event.

Why it matters: Shows cost trends across storms and seasons. Helps you compare vendor pricing, identify cost spikes, and forecast budgets. Essential for deciding between seasonal contracts vs. per-push pricing.

How to measure:

-

Track total vendor invoices per storm event

-

Divide by number of locations serviced

-

Trend over time by vendor, region, storm severity

-

Example: $8,500 total cost ÷ 42 locations = $202 per location

Target benchmark:

-

Varies significantly by region, lot size, and storm severity

-

More useful as a trend than absolute number

-

Cost per location increasing over season? Vendor pricing changes or more emergency calls.

-

Cost per location varies widely between vendors for similar locations? Pricing efficiency issue.

What the data tells you:

-

Sudden cost spike for specific event? Check if emergency rates were triggered unnecessarily.

-

One vendor significantly more expensive per location? Renegotiate or rebid.

-

Seasonal flat rate came out cheaper than per-push? Change contract structure next year.
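The cost-per-location arithmetic above is simple enough to keep in a spreadsheet, but here is a minimal sketch of the same calculation, including a per-vendor comparison. The vendor names and invoice figures in the comparison are hypothetical; only the $8,500 and 42-location figures come from the example above.

```python
def cost_per_location(total_invoiced: float, locations_serviced: int) -> float:
    """Average cost to service one location during one snow event."""
    if locations_serviced <= 0:
        raise ValueError("no locations serviced")
    return total_invoiced / locations_serviced


def by_vendor(invoices):
    """invoices: {vendor: (total_invoiced, locations_serviced)}."""
    return {
        vendor: round(cost_per_location(total, count), 2)
        for vendor, (total, count) in invoices.items()
    }


# Figures from the example above: $8,500 across 42 locations.
event_cost = cost_per_location(8_500, 42)
print(f"${event_cost:,.0f} per location")  # prints "$202 per location"

# Hypothetical split of the same event between two vendors.
print(by_vendor({"Vendor A": (4_200, 20), "Vendor B": (4_300, 22)}))
```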

5. Emergency/Premium Rate Frequency

What it measures: Percentage of total vendor dispatches that occurred at emergency or premium rates instead of standard contracted rates.

Why it matters: Emergency rates typically cost 1.5x to 2x standard rates. If you're hitting emergency rates frequently, you're either not pre-positioning vendors properly, your primary vendors are unreliable, or your dispatch triggers are wrong. This metric catches expensive process failures.

How to measure:

-

Track total dispatch events across all vendors

-

Track how many were billed at emergency/premium rates

-

Calculate frequency: (Emergency rate dispatches ÷ Total dispatches) × 100

-

Also track by vendor. If one vendor triggers emergency rates frequently, that's a vendor problem, not a process problem

Target benchmark:

-

Less than 10% of total dispatches should be at emergency rates

-

Truly unpredictable events (forecast changed dramatically, vendor emergency cancellation) justify emergency rates

-

Consistent emergency rate usage means your standard process is broken

What the data tells you:

-

Emergency rate frequency above 15%? You're not pre-positioning vendors or monitoring forecasts effectively.

-

Emergency rates clustered with specific vendor? That vendor is unreliable; they're not managing their capacity.

-

Emergency rates only during extreme events? That's appropriate. You're using the escalation path correctly.
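A minimal sketch of the emergency-rate calculation, overall and by vendor, might look like the following. It assumes each dispatch record carries a flag for whether it was billed at emergency or premium rates; the record layout and names are hypothetical.

```python
from collections import defaultdict


def emergency_rates(dispatches):
    """dispatches: list of (vendor, billed_at_emergency_rate) pairs.

    Returns (overall_pct, {vendor: pct}).
    """
    if not dispatches:
        raise ValueError("no dispatches to analyze")
    totals = defaultdict(int)
    emergencies = defaultdict(int)
    for vendor, is_emergency in dispatches:
        totals[vendor] += 1
        emergencies[vendor] += int(is_emergency)
    overall = 100 * sum(emergencies.values()) / sum(totals.values())
    per_vendor = {v: 100 * emergencies[v] / totals[v] for v in totals}
    return overall, per_vendor


# Hypothetical season: Vendor A never billed emergency rates, Vendor B did twice.
sample = (
    [("Vendor A", False)] * 6
    + [("Vendor B", True)] * 2
    + [("Vendor B", False)] * 2
)
overall, per_vendor = emergency_rates(sample)
```

In this sample the overall rate is 20%, but all of it comes from one vendor, which points to a vendor problem rather than a dispatch-trigger problem.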

6. Budget Variance by Region/Vendor

What it measures: Difference between forecasted seasonal spend and actual spend, broken down by region and vendor.

Why it matters: Identifies which vendors or regions are running over budget and why. Essential for next year's budget planning and contract negotiations. If you don't track variance, you have no idea if your $150K snow removal budget was realistic or if you're consistently 30% over.

How to measure:

-

Set seasonal budget forecast at beginning of winter (based on historical average storm frequency and contracted rates)

-

Track actual spend throughout season by vendor and region

-

Calculate variance: ((Actual spend - Forecasted spend) ÷ Forecasted spend) × 100

-

Review mid-season and end-of-season

Target benchmark:

-

±15% variance is reasonable (weather is unpredictable)

-

Consistent 30%+ overruns? Budget forecasting is unrealistic or vendor costs are out of control

-

Significant underruns? You over-forecasted or had a mild winter. Adjust next year's budget accordingly

What the data tells you:

-

Midwest region 40% over budget but Northeast on target? Regional weather was worse than forecast or Midwest vendor rates are too high.

-

All regions over budget by similar percentage? Winter was harsher than forecast, not a process problem.

-

Specific vendor significantly over their forecast? Billing issues or they're padding invoices. Audit their work orders.
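The variance formula and benchmarks above can be sketched as follows. The function names and labels are hypothetical, and the source does not say how to treat overruns between 15% and 30%, so the sketch simply labels that band "above tolerance" as an assumption.

```python
def budget_variance_pct(actual: float, forecast: float) -> float:
    """((Actual spend - forecasted spend) / forecasted spend) x 100."""
    if forecast <= 0:
        raise ValueError("forecast must be positive")
    return 100 * (actual - forecast) / forecast


def classify(variance_pct: float) -> str:
    if abs(variance_pct) <= 15:
        return "within expected range"
    if variance_pct >= 30:
        return "investigate overrun"
    if variance_pct > 15:
        return "above tolerance"  # assumed label for the 15-30% band
    return "underrun: revisit forecast"


# A $150K budget with $195K actual spend is a 30% overrun.
variance = budget_variance_pct(195_000, 150_000)
print(classify(variance))
```

Running the same calculation per region and per vendor, rather than only on the seasonal total, is what lets you separate a harsh winter from a single expensive vendor.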

Accountability and Process Metrics

7. Vendor No-Show or Incomplete Service Rate

What it measures: Percentage of dispatched work orders where vendor failed to arrive, arrived but didn't complete service, or service was so poor it required re-work.

Why it matters: Direct measure of vendor reliability. Every no-show or incomplete service forces you into emergency escalation mode: backup vendors, premium rates, store closures. This metric separates good vendors from vendors who just talk a good game.

How to measure:

-

Track total work orders dispatched to each vendor

-

Track work orders where vendor never arrived (no-show)

-

Track work orders where vendor arrived but service was incomplete (didn't finish, major areas missed, no salt applied, etc.)

-

Calculate rate: ((No-shows + Incomplete) ÷ Total dispatched) × 100

Target benchmark:

-

Under 5% total failure rate across all vendors

-

Any single vendor above 10% failure rate is unacceptable

-

Failure rate should trend toward zero as you enforce accountability

What the data tells you:

-

New vendor with high failure rate early season but improving? Learning curve. Monitor closely.

-

Established vendor with increasing failure rate? They're overcommitted or losing capacity. Address immediately.

-

Failure rate spikes during major storms only? Vendor can't handle volume. You need additional backup vendors.
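Here is a minimal sketch of the failure-rate formula above, with a check against the 10% single-vendor limit. The input layout, vendor names, and counts are hypothetical.

```python
def failure_rate(no_shows: int, incomplete: int, total_dispatched: int) -> float:
    """((No-shows + incomplete) / total dispatched) x 100."""
    if total_dispatched <= 0:
        raise ValueError("no work orders dispatched")
    return 100 * (no_shows + incomplete) / total_dispatched


def vendors_over_limit(stats, limit_pct: float = 10.0):
    """stats: {vendor: (no_shows, incomplete, total_dispatched)}.

    Returns the vendors whose failure rate exceeds limit_pct.
    """
    return [
        vendor
        for vendor, (no_shows, incomplete, total) in stats.items()
        if failure_rate(no_shows, incomplete, total) > limit_pct
    ]


# Hypothetical season totals.
season = {"Vendor A": (1, 0, 40), "Vendor B": (3, 3, 40)}
print(vendors_over_limit(season))
```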

8. Escalation Frequency

What it measures: How often you have to activate backup vendors, emergency protocols, or escalate decisions up the chain because primary process broke down.

Why it matters: Shows if your standard process is working or if you're constantly operating in crisis mode. High escalation frequency means something in your primary process is broken: bad vendor assignments, wrong dispatch triggers, unclear decision authority, or unreliable vendors.

How to measure:

-

Track total storm events

-

Track events requiring backup vendor activation, emergency approvals outside normal authority, or process exceptions

-

Calculate frequency: (Events requiring escalation ÷ Total storm events) × 100

-

Also track reason for escalation (vendor no-show, forecast change, equipment failure, etc.)

Target benchmark:

-

Less than 10% of storm events should require escalation

-

Occasional escalation during truly unusual circumstances (10+ inch storm, vendor equipment breakdown) is normal

-

Frequent escalation means your standard process can't handle standard conditions

What the data tells you:

-

Escalations always for same vendor? Vendor reliability problem.

-

Escalations always during morning rush timing? Dispatch trigger timing needs adjustment.

-

Escalations requiring emergency approvals? Decision authority isn't clear enough in your process.
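Escalation frequency and the reasons behind it can be tallied together. The sketch below assumes one entry per storm event, holding either the escalation reason or `None` when the standard process held; that representation and the function name are hypothetical.

```python
from collections import Counter


def escalation_summary(events):
    """events: one entry per storm event, a reason string or None.

    Returns (frequency_pct, [(reason, count), ...]) with the most
    common reasons first.
    """
    if not events:
        raise ValueError("no storm events recorded")
    reasons = [reason for reason in events if reason is not None]
    frequency = 100 * len(reasons) / len(events)
    return frequency, Counter(reasons).most_common()


# Hypothetical season: ten storms, two escalations for the same reason.
season_events = [None] * 8 + ["vendor no-show", "vendor no-show"]
frequency, top_reasons = escalation_summary(season_events)
```

Keeping the reason next to the count matters: a 20% escalation rate driven entirely by one vendor's no-shows calls for a different fix than the same rate spread across forecast changes and approval delays.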

9. Documentation Completion Rate

What it measures: Percentage of work orders with all required documentation submitted by both vendors (photos, service notes, timestamps) and coordinators (quality verification, issue logging).

Why it matters: Documentation is your entire accountability system. Without complete documentation, you can't prove service quality, can't dispute vendor invoices, can't track performance trends, and can't make data-driven decisions. If documentation isn't at 100%, your other metrics are unreliable.

How to measure:

-

Define required documentation elements (before/after photos, service timestamp, areas serviced, salt application, issues encountered, coordinator quality verification)

-

Track work orders with complete documentation vs. total work orders

-

Calculate completion rate: (Fully documented work orders ÷ Total work orders) × 100

-

Track separately for vendor documentation and coordinator verification

Target benchmark:

-

100% documentation completion, non-negotiable

-

If you accept incomplete documentation, you've trained everyone that standards are optional

-

This is a process discipline metric: it's either happening consistently or it's not happening at all

What the data tells you:

-

Documentation completion below 95%? Either vendors don't understand requirements or coordinators aren't enforcing them.

-

Vendor documentation high but coordinator verification low? Your coordinator is overwhelmed or skipping quality control.

-

Documentation completion drops during busy storm periods? You need better CMMS workflows or additional coordination capacity.
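Tracking vendor documentation and coordinator verification separately, as suggested above, could look like this minimal sketch. The work-order fields `vendor_docs` and `coordinator_verified` are hypothetical stand-ins for whatever your CMMS exports.

```python
def documentation_rates(work_orders):
    """work_orders: list of dicts with boolean 'vendor_docs' and
    'coordinator_verified' fields.

    Returns percentages for vendor documentation, coordinator
    verification, and work orders where both are complete.
    """
    total = len(work_orders)
    if total == 0:
        raise ValueError("no work orders to analyze")
    vendor_ok = sum(1 for w in work_orders if w["vendor_docs"])
    coordinator_ok = sum(1 for w in work_orders if w["coordinator_verified"])
    both_ok = sum(
        1 for w in work_orders if w["vendor_docs"] and w["coordinator_verified"]
    )
    return {
        "vendor_pct": 100 * vendor_ok / total,
        "coordinator_pct": 100 * coordinator_ok / total,
        "fully_documented_pct": 100 * both_ok / total,
    }


# Hypothetical storm: vendors documented everything, coordinator verified half.
orders = [
    {"vendor_docs": True, "coordinator_verified": True},
    {"vendor_docs": True, "coordinator_verified": True},
    {"vendor_docs": True, "coordinator_verified": False},
    {"vendor_docs": True, "coordinator_verified": False},
]
rates = documentation_rates(orders)
```

A gap like this one, with vendor documentation high and coordinator verification low, is the pattern that suggests a coordination capacity problem rather than a vendor problem.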

Teaching You to Find Your Own KPIs: The Pain Point Method

The nine metrics above are essential for any multi-location snow removal operation. But your operation has unique challenges that might need unique metrics. Here's how to identify what else you should track.

Start with your biggest pain points from last season. Don't make up metrics that sound sophisticated. Track the specific problems that cost you money, time, or sleep.

Pain point: "We could never get straight answers about whether vendors actually showed up or if store managers were just impatient."

Metric to track: Vendor arrival confirmation time (when coordinator dispatched vs. when vendor confirmed on-site arrival). If vendors confirm arrival but managers still complain, the problem is communication, not vendors. If vendors aren't confirming arrival consistently, that's a vendor accountability issue.

Pain point: "We blew our budget by 40% and couldn't figure out why until we got all the invoices in March."

Metrics to track: Real-time cost tracking per storm event, emergency rate frequency, cost per location trending. Review these weekly during active season, not after winter ends.

Pain point: "Store managers kept calling us saying lots weren't fully cleared, but vendors swore they did complete service."

Metrics to track: Service quality verification rate (photo documentation), specific completion criteria checklist (fuel islands cleared Y/N, walkways salted Y/N, snow dumped in approved areas Y/N). Quality disputes drop to near-zero when you have photo evidence and specific checklists.

Pain point: "Our new coordinator was completely overwhelmed during storms and we had no idea what they were struggling with."

Metrics to track: Time from dispatch to vendor confirmation, documentation completion rate, escalation frequency by coordinator. These metrics show whether the coordinator is slow at dispatching, struggling with quality verification, or unclear on when to escalate. Different problems need different solutions.

Map Metrics to Your Five Questions

Remember Part 1? Your five questions weren't arbitrary—they were the foundation of a working process. Your metrics should tell you if you're actually executing against those questions.

Question 1: Do you have a documented process?

Metric: Documentation completion rate. If work orders aren't documented consistently, your process isn't being followed.

Question 2: Can you define what "plowed and salted" means?

Metric: Service quality verification rate. If you can't verify that standards were met, your standards aren't clear enough.

Question 3: Who owns what when weather hits?

Metric: Escalation frequency. High escalation means role clarity is poor—people don't know what decisions they can make without asking permission.

Question 4: How do you onboard someone into your snow operations?

Metric: Time to independent operation for new coordinators. Track how many storms it takes before a new coordinator can manage independently without constant oversight. If it's more than 3-4 storms, your process documentation and SOPs aren't good enough.

Question 5: What does "completed successfully" look like?

Metrics: Vendor no-show rate, completion rate within service windows, quality verification rate. These metrics directly measure if work is actually getting completed to standard.

This is the connection most operations miss: your metrics should validate that your process is working. If your process says "coordinators verify photo documentation within 4 hours" but your documentation completion metric shows 70% compliance, your process exists on paper but not in reality.

Every KPI Should Drive a Decision or Action

Here's the test for whether a metric is actually useful: if this number is bad, what specifically would we change?

If you can't answer that question, it's not a KPI. It's trivia.

"Vendor response time is averaging 3.5 hours."

Decision it drives: Switch to backup vendor for that region, or renegotiate SLA with current vendor including financial penalties for late response.

"Emergency rate frequency is 25%."

Decision it drives: Adjust dispatch triggers to call vendors earlier, improve forecast monitoring process, or replace primary vendors who aren't managing their capacity properly.

"Cost per location in Midwest region is $380, Northeast is $195."

Decision it drives: Investigate Midwest pricing (are lots larger? More complex? Or is vendor just expensive?), potentially rebid Midwest contracts, or adjust budget allocation by region.

"Documentation completion rate is 68%."

Decision it drives: Implement required photo uploads before work orders can close in CMMS, retrain vendors on documentation standards, or add coordinator capacity if they're too overwhelmed to verify properly.

Metrics that don't drive decisions are just numbers you report because someone told you to track them. Useful metrics tell you what's broken and what to fix.

Making Metrics Operational: From Data to Action

You now know what to measure. The hard part isn't identifying metrics; it's tracking them consistently and using them to make decisions before spring arrives.

KPIs are useless if nobody looks at them until the season ends and it's time to review vendor contracts. Here's how to make metrics operational during the season when they can actually change outcomes.

Build a Simple Dashboard or Tracking Sheet

You don't need fancy business intelligence software. You need a simple place where metrics are updated consistently and visible to everyone who needs them.

Minimum viable dashboard structure:

Storm Event Summary (updated after each storm):

-

Date and accumulation

-

Locations dispatched

-

Completion rate

-

Average vendor response time

-

Emergency rate calls (Y/N and why)

-

Total cost and cost per location

-

Issues encountered

Vendor Performance Summary (running totals):

-

Total dispatches per vendor

-

Average response time per vendor

-

Completion rate per vendor

-

No-show or incomplete service count

-

Documentation compliance rate

-

Total cost and cost per location per vendor

Process Compliance Summary (running totals):

-

Documentation completion rate overall

-

Escalation frequency

-

Budget variance (actual vs. forecast)

This can be a Google Sheet, an Excel file, a CMMS report—whatever you'll actually keep updated. The format doesn't matter. Consistency does.
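If you do keep the tracking sheet in code or export it from a CMMS, one storm-event summary row might be modeled as below. This is only a sketch: the `StormSummary` class and its field names are hypothetical, and cost per location is assumed to be divided by locations actually serviced.

```python
from dataclasses import dataclass, asdict


@dataclass
class StormSummary:
    date: str
    accumulation_in: float
    locations_dispatched: int
    locations_completed: int
    avg_response_hours: float
    emergency_rate_used: bool
    total_cost: float
    issues: str = ""

    def row(self) -> dict:
        """One dashboard row, with the derived metrics filled in."""
        if self.locations_dispatched <= 0 or self.locations_completed <= 0:
            raise ValueError("need at least one dispatched and completed location")
        row = asdict(self)
        row["completion_rate_pct"] = round(
            100 * self.locations_completed / self.locations_dispatched, 1
        )
        row["cost_per_location"] = round(
            self.total_cost / self.locations_completed, 2
        )
        return row


# Hypothetical storm event.
storm = StormSummary("2025-01-10", 3.5, 42, 40, 1.8, False, 8_500.0)
summary_row = storm.row()
```

Each row can be appended to a spreadsheet or CSV after a storm, which keeps the running totals for the vendor and process summaries easy to compute.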

Review Cadence: When to Look at Which Metrics

During active storm (real-time):

-

Vendor response times (are they arriving as expected?)

-

Completion tracking (which locations are done, which are pending?)

-

Escalation needs (do we need to activate backup vendors?)

You're not doing analysis during a storm. You're monitoring execution.

Week after storm (post-event review):

-

Final completion rates for that event

-

Cost analysis (actual vs. expected for that storm severity)

-

Quality verification (photo documentation review, any complaints or issues)

-

Vendor performance summary for that specific event

This is when you catch problems while they're still fresh. If vendor response time was poor during this storm, address it before the next storm hits.

Monthly during active season:

-

Trend analysis (are metrics improving, stable, or declining?)

-

Budget variance review (are we tracking to forecast or running over?)

-

Vendor performance comparison (which vendors are performing, which need attention?)

This is your course-correction checkpoint. Mid-season is when you can still make changes—switch vendors, adjust processes, retrain coordinators.

End of season (contract decision review):

-

Complete vendor performance scorecards

-

Total seasonal cost analysis

-

ROI on seasonal contracts vs. per-push pricing

-

Process effectiveness review (what worked, what didn't, what changes for next year)

This is when you make contract renewal decisions, budget next year, and update your process based on what metrics revealed.

Who Owns Reporting Each Metric

Tie this back to your responsibility matrix from Part 3. Every metric needs an owner who's responsible for tracking it and reporting it.

Maintenance Coordinator owns:

-

Vendor response time logging (they're dispatching and confirming arrival)

-

Documentation completion tracking (they're verifying photos and closing work orders)

-

Real-time storm monitoring (completion rates, vendor status)

Facilities Director owns:

-

Cost analysis and budget variance (they're approving invoices and managing budgets)

-

Vendor performance scorecards (they're making contract decisions)

-

Process effectiveness review (they're responsible for process outcomes)

Don't create metrics that nobody owns. If "someone should be tracking cost per location," that means nobody will actually track it.

Examples: How KPIs Change Behavior

Metrics only matter if they actually change what people do. Here's what happens when you track performance instead of guessing.

Example 1: Response Time Metric Reveals Vendor Reliability Problem

A facilities team managing 200+ locations across the Midwest tracked vendor response time for the first time. They'd been using the same primary vendor for three years and assumed the relationship was solid.

Data showed: Average response time was 3.2 hours. But when they broke it down by storm event, they discovered the vendor was under 2 hours for the first few storms, then climbed to 4+ hours consistently by mid-season. The vendor was taking on too many clients and prioritizing other accounts over them.

Action taken: In a mid-season conversation, the team told the vendor to either commit to a 2-hour response or lose the contract. The vendor couldn't commit. The team switched immediately to its backup vendor, which averaged 1.5 hours for the rest of the season. Emergency rate activation dropped from 30% to under 5% because the new vendor was reliable.

Impact: Saved approximately $35,000 in emergency rate calls for the remainder of the season. More importantly, stores weren't inaccessible for 4+ hours during storms.
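The key move in this example was breaking a season-wide average down by storm event. As a rough sketch of that breakdown, assuming response times are logged as `(storm_date, response_hours)` pairs: the function names, the 2-hour target default, and the sample dates and hours below are illustrative assumptions, not the team's actual data.

```python
from collections import defaultdict
from statistics import mean


def response_by_storm(records):
    """Average response time per storm, ordered by storm date.

    records: iterable of (storm_date, response_hours) pairs, with dates
    as ISO strings so that sorting them sorts chronologically.
    """
    grouped = defaultdict(list)
    for storm_date, hours in records:
        grouped[storm_date].append(hours)
    return {d: mean(grouped[d]) for d in sorted(grouped)}


def storms_over_target(per_storm, target_hours=2.0):
    """Storm dates whose average response exceeded the target."""
    return [d for d, avg in per_storm.items() if avg > target_hours]


# Illustrative log: fast early in the season, slow later.
records = [
    ("2024-12-03", 1.5), ("2024-12-03", 2.0),
    ("2024-12-19", 1.8),
    ("2025-01-14", 4.0), ("2025-01-14", 4.5),
    ("2025-01-28", 4.2),
]
per_storm = response_by_storm(records)
overall = mean(hours for _, hours in records)
late = storms_over_target(per_storm)
print(per_storm, overall, late)
```

In this made-up log the season-wide average looks tolerable on its own, while the per-storm view shows that every storm from mid-January onward missed the target. That is the pattern a single average hides.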

Example 2: Documentation Rate Reveals Process Shortcut

A maintenance coordinator was closing work orders quickly, which produced great productivity numbers. But the facilities director noticed increasing quality complaints from store managers about incomplete service.

When they started tracking documentation completion rate, they discovered only 60% of work orders had required completion photos. The coordinator was marking work orders complete based on vendor text messages ("all done at site 47") without photo verification.

Action taken: Changed the CMMS workflow so work orders couldn't close without photo uploads. Retrained the coordinator on why verification matters: it's not busywork, it's the only way to hold vendors accountable. Within two weeks, documentation rate was 98%.

Impact: Quality complaints dropped to near zero because vendors knew their work would be verified. The team also caught one vendor who was consistently cutting corners: they weren't salting walkways, just plowing lots. That vendor was replaced.
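Both halves of this fix, measuring the documentation rate and blocking closure without photos, are simple to express. The sketch below assumes work orders are available as plain records with a `closed` flag and a list of `photos`. Those field names and the sample work orders are hypothetical, and the code does not represent any particular CMMS product's API.

```python
def can_close(work_order):
    """Allow closure only when at least one completion photo is attached."""
    return len(work_order["photos"]) > 0


def documentation_rate(work_orders):
    """Share of closed work orders that have at least one completion photo."""
    closed = [w for w in work_orders if w["closed"]]
    if not closed:
        return None  # nothing closed yet, so there is no rate to report
    return sum(can_close(w) for w in closed) / len(closed)


# Illustrative records only.
work_orders = [
    {"id": "WO-101", "closed": True, "photos": ["lot.jpg", "walkway.jpg"]},
    {"id": "WO-102", "closed": True, "photos": []},
    {"id": "WO-103", "closed": True, "photos": ["lot.jpg"]},
    {"id": "WO-104", "closed": True, "photos": []},
    {"id": "WO-105", "closed": True, "photos": ["lot.jpg"]},
    {"id": "WO-106", "closed": False, "photos": []},
]
rate = documentation_rate(work_orders)  # 3 of the 5 closed orders have photos
print(rate)
```

Using the same `can_close` check for both the metric and the workflow gate keeps the two from drifting apart: whatever counts as "documented" in the report is exactly what the system requires before closure.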

Example 3: Cost Per Location Tracking Reveals Site Map Problem

A chain tracking cost per location noticed one region was 40% more expensive than others: $280 per location vs. a $195 average. The team initially assumed it was just regional pricing differences or larger lots.

When they investigated, they discovered site maps for that region were outdated. Vendors were plowing areas that didn't need service (old parking sections that were now landscaping) and missing priority areas (new fuel island expansion). Vendors were doing more work than necessary and still leaving critical areas unserviced.

Action taken: Updated site maps for entire region with current layouts, no-plow zones, and priority areas. Sent updated maps to vendors with revised service specifications.

Impact: Cost per location dropped to $210 (25% reduction) because vendors weren't plowing unnecessary areas. Service quality improved because vendors now had clear guidance on what actually needed to be done.
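The arithmetic behind this example is worth making routine so that outlier regions surface on their own. Here is a small sketch using the $280, $195, and $210 figures from the example. The other regional costs, the region names, and the 25% flagging threshold are assumptions chosen for illustration.

```python
def pct_difference(value, baseline):
    """Relative difference of value from baseline (0.25 means 25% higher)."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (value - baseline) / baseline


def flag_regions(cost_per_location, baseline, threshold=0.25):
    """Regions whose cost per location exceeds the baseline by more than threshold."""
    flagged = {}
    for region, cost in cost_per_location.items():
        diff = pct_difference(cost, baseline)
        if diff > threshold:
            flagged[region] = diff
    return flagged


# Region B uses the $280 figure from the example; A and C are made up.
regional_cost = {"Region A": 190.0, "Region B": 280.0, "Region C": 200.0}
flagged = flag_regions(regional_cost, baseline=195.0)

# Reduction after the site maps were updated: $280 down to $210.
reduction = -pct_difference(210.0, 280.0)
print(flagged, reduction)
```

Measured against the $195 average, the $280 region comes out roughly 44% higher, in line with the approximate 40% gap that prompted the investigation, and the drop from $280 to $210 is the 25% reduction cited above.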

From Guessing to Knowing

You started Part 1 asking five questions about your snow removal operation. By Part 4, you're not just answering those questions; you're proving the answers with data.

The teams that control snow season, rather than just surviving it, all do four things:

1. Answer the five questions before the first storm (Part 1) - They know what their process should be

2. Document their process clearly (Part 2) - They turn tribal knowledge into usable documentation

3. Assign clear ownership with SOPs (Part 3) - They make sure someone actually executes the process

4. Track performance with real metrics (Part 4) - They know if it's working or where it's breaking down

Most operations do one or two of these. Maybe three if they're disciplined. Almost none do all four.

The difference between chaos and control isn't luck. It's not vendor relationships or budget size. It's having a clear process, clear ownership, and clear metrics that tell you if you're executing.

Winter is coming. The forecast will show snow. Your vendors will get calls from dozens of operations at the same time. The ones with clear processes, clear expectations, and clear accountability will get serviced first. The ones scrambling with last-minute calls and vague instructions will get serviced eventually—maybe.

Which operation will you be?

Start This Week, Not When You See Snow

Don't wait for the first forecast to start building this system. Here's your action plan:

Week one:

-

Gather your team and work through the five questions in Part 1

-

Record the conversation or write down your answers

-

Identify the biggest gap in your current process

Week two:

-

Use the AI prompt from Part 2 to turn your answers into a process document

-

Review and refine the output with your team

-

Identify which tasks need detailed SOPs

Week three:

-

Create your responsibility matrix from Part 3

-

Assign clear ownership for every task

-

Write SOPs for your three most complex or judgment-heavy tasks

Week four:

-

Set up your metrics dashboard from Part 4

-

Define your target benchmarks for each essential KPI

-

Decide who owns tracking and reporting each metric